As part of my move to IPv6-only networking, I took some time to re-evaluate the functions and configurations of my VM hosts with the aim of greater segregation between workloads, more predictable failure scenarios, and an IPv6 addressing plan that is up to scratch. (I also have one VM host still running Xen that I'm migrating to KVM.)

Two distinct use cases

In thinking about the network topologies used in these VM hosts, I realised I had two use cases with slightly different topology requirements:

- VMs which service clients on a LAN (e.g. firewalls, DHCP servers, SMB file servers) - these need layer 2 access in order to send and receive broadcast/multicast traffic or provide routing functionality.

- VMs which provide services which need only a TCP or UDP connection (e.g. DNS, HTTPS, or NTP servers) - generally these could be hosted anywhere and aren't limited by needing layer 2 access to clients. These services make up the vast majority of what we see in modern enterprise microservice architectures.

There's not a clear distinction between these two use cases and there may be some overlap - for example, it is possible to use DHCP or SMB without direct layer 2 access, and a host might be both a DNS and a DHCP server for the same clients. But the services in use case #2 give a little more flexibility regarding their placement in the network, meaning that tighter micro-segmentation can be applied.

Common options for network topologies within a KVM host are:

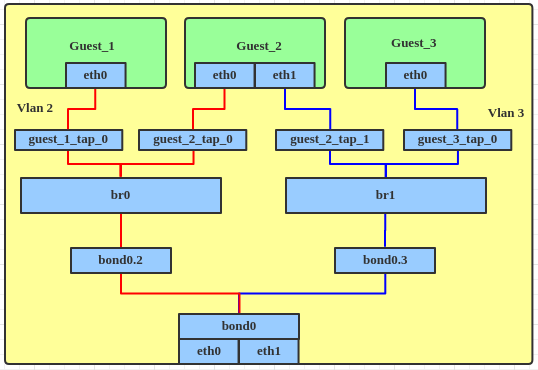

Bridge-per-VLAN

This is the traditional method for isolating multiple guests on a VM host using VLANs. Figure 1 (from Red Hat's developer blog) shows this approach, which requires a separate bridge for each VLAN needed on the host. If a guest needs to connect to multiple VLANs (e.g. Guest_2 in Figure 1), an additional virtual NIC is required for each additional VLAN. This topology works fine, but it has a lot of moving parts and both the host and guest networking feel more complex than they need to be.

Figure 1: Traditional bridge-per-VLAN topology
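
To give a feel for the number of moving parts, here is a minimal sketch that only generates (and does not run) the iproute2 commands for a bridge-per-VLAN host. The uplink name `eth0`, the VLAN IDs 10 and 20, and the `br<vid>` naming scheme are illustrative assumptions, not my actual configuration.

```python
def bridge_per_vlan_commands(uplink, vlan_ids):
    """Return iproute2 commands that build one bridge per VLAN on `uplink`.

    All names are hypothetical; the commands are returned as strings
    so they can be reviewed before anything is run as root.
    """
    commands = []
    for vid in vlan_ids:
        subif = f"{uplink}.{vid}"  # VLAN sub-interface, e.g. eth0.10
        bridge = f"br{vid}"        # dedicated bridge for this VLAN
        commands += [
            f"ip link add link {uplink} name {subif} type vlan id {vid}",
            f"ip link add name {bridge} type bridge",
            f"ip link set dev {subif} master {bridge}",
            f"ip link set dev {subif} up",
            f"ip link set dev {bridge} up",
        ]
    return commands


if __name__ == "__main__":
    for cmd in bridge_per_vlan_commands("eth0", [10, 20]):
        print(cmd)
```

Every extra VLAN adds another sub-interface and another bridge, which is the complexity referred to above.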

Open vSwitch

Open vSwitch is a highly capable, multi-host software switch which can support almost any network design. I briefly investigated it, but decided against it because of the learning curve. More importantly, it didn't solve the issue of client separation in my second use case more elegantly than traditional Linux bridges - one would still need a dedicated VLAN for every VM in order to provide full isolation between individual guests. If I worked with Open vSwitch in my day job I would likely have used it without even thinking about the decision.

Bridge VLAN filtering

The previously mentioned Red Hat Developer blog post explains another option which has been present in the Linux kernel since version 3.8: bridge VLAN filtering. This feature allows multiple VLANs to be defined on a single bridge, with host and guest ports then assigned to one or more of those VLANs. This means that a simpler topology can be deployed, involving only one bridge on the host. It's easy to deploy and seems very robust in my experience so far.
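
As a rough illustration of how this looks in practice, the sketch below generates the `ip` and `bridge` commands for a single VLAN-aware bridge. It assumes a bridge called `br0`, an uplink `eth0` carrying all VLANs tagged, and guest-facing ports (`vnet0` and friends) that have already been attached to the bridge, each acting as an untagged access port in one VLAN. All of those names and VLAN IDs are hypothetical.

```python
def vlan_filtering_commands(bridge, uplink, guest_ports):
    """Return commands for one VLAN-aware bridge.

    guest_ports maps a guest-facing port name to its access VLAN ID.
    Assumption: those ports are already members of `bridge`.
    """
    commands = [
        # Create the bridge with VLAN filtering switched on
        f"ip link add name {bridge} type bridge vlan_filtering 1",
        f"ip link set dev {uplink} master {bridge}",
    ]
    # The uplink carries every guest VLAN as a tagged member
    for vid in sorted(set(guest_ports.values())):
        commands.append(f"bridge vlan add dev {uplink} vid {vid}")
    # Each guest port leaves the default VLAN 1 and joins its own VLAN untagged
    for port, vid in guest_ports.items():
        commands += [
            f"bridge vlan del dev {port} vid 1",
            f"bridge vlan add dev {port} vid {vid} pvid untagged",
        ]
    return commands


if __name__ == "__main__":
    ports = {"vnet0": 10, "vnet1": 20, "vnet2": 10}
    for cmd in vlan_filtering_commands("br0", "eth0", ports):
        print(cmd)
```

Compared with bridge-per-VLAN, adding a VLAN here means adding membership entries rather than new interfaces and bridges.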

Point-to-point virtual Ethernet

Another less well-known topology is that of point-to-point virtual Ethernet (veth pairs). Something very similar is deployed behind the scenes whenever guest VMs are linked to Linux bridges: with QEMU/KVM, a TAP device on the host is attached to the bridge, and its other end is presented to the guest as the virtual NIC. If my memory serves me correctly, Open vSwitch does the same or very similar (please reach out if I'm misremembering this - I can't lay my hands on the substantiating documentation at the moment). If the host is used as a firewall/router and high levels of client separation are desired, this is actually the simplest possible topology.
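
One way to picture the routed variant of this topology: with no bridge involved, the host needs a host route per guest pointing at that guest's host-side interface. The sketch below only builds the corresponding `ip route` commands. The interface names and the documentation-range addresses are assumptions for illustration, and a real deployment would also need forwarding and firewall rules that are not shown.

```python
import ipaddress


def host_route_commands(guests):
    """Return one host-route command per guest.

    guests maps a host-side interface name (hypothetical, e.g. vnet0)
    to the address of the guest on the other end of that link.
    """
    commands = []
    for ifname, addr in guests.items():
        ip = ipaddress.ip_address(addr)
        family_flag = "-6" if ip.version == 6 else "-4"
        # max_prefixlen is 32 for IPv4 and 128 for IPv6, i.e. a host route
        commands.append(
            f"ip {family_flag} route add {ip}/{ip.max_prefixlen} dev {ifname}"
        )
    return commands


if __name__ == "__main__":
    example = {"vnet0": "2001:db8:0:1::10", "vnet1": "192.0.2.10"}
    for cmd in host_route_commands(example):
        print(cmd)
```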

Horses for courses

If it isn't obvious by now, I decided that bridge VLAN filtering was the right choice for my first use case, and that point-to-point virtual Ethernet was the better fit for my second use case. I have VLAN filtering deployed on two KVM hosts and point-to-point Ethernet on a third. I'll go into more detail on both in future posts.

Overlays

A quick aside on overlay networks to wrap up: I'm sure it would have been possible to use overlay networks like VXLAN to deal with both of these use cases, but I didn't bother investigating. One of the main problems they were designed to solve is scalability, and they buy that scalability at the cost of added complexity. Since TLNTC doesn't have a scalability problem, and simplicity is one of its core values, they ruled themselves out.

Additionally, overlays generally emulate layer 2 over layer 4, and like some network engineers I have a (possibly irrational) dislike of layer 2 generally and a corresponding fascination with routing. Being able to view IPv4 /32 or IPv6 /128 routes for individual hosts in one unified routing table means that I can quickly and easily gauge whether each VM host and guest is working.
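
That kind of check is easy to script. The following is a small sketch, assuming the input is the text output of `ip route show` and `ip -6 route show` (where host routes are normally printed without an explicit /32 or /128 suffix). The function names and sample addresses are hypothetical.

```python
import ipaddress


def host_routes(route_lines):
    """Return {address: interface} for every /32 or /128 route in the listing."""
    found = {}
    for line in route_lines:
        fields = line.split()
        if not fields or "dev" not in fields[:-1]:
            continue
        try:
            # A bare address parses as a /32 or /128 network
            net = ipaddress.ip_network(fields[0], strict=False)
        except ValueError:
            continue  # e.g. "default" or a route-type keyword
        if net.prefixlen == net.max_prefixlen:
            found[str(net.network_address)] = fields[fields.index("dev") + 1]
    return found


def missing_guests(expected, route_lines):
    """Return the expected guest addresses that have no host route."""
    present = host_routes(route_lines)
    return sorted(
        addr for addr in expected
        if str(ipaddress.ip_address(addr)) not in present
    )


if __name__ == "__main__":
    sample = [
        "default via fe80::1 dev eth0 metric 1024",
        "2001:db8:0:1::10 dev vnet0 metric 1024 pref medium",
        "2001:db8:0:1::/64 dev eth0 proto kernel metric 256",
        "192.0.2.10 dev vnet1 scope link",
    ]
    print(host_routes(sample))
    print(missing_guests(["2001:db8:0:1::10", "2001:db8:0:1::11"], sample))
```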